The main thing that started this quest was realizing that I don’t know how to code, while AI-powered IDEs know a bit about how to code.

But when they have no supervision and no context, and the file gets big, they start making errors that I don’t know how to fix either.

So it will keep running in circles and I won’t even know what a circle is.

So I’m trying to give it the monitoring and feedback it needs, so that it can help itself and get me where I want to go.

So basically, the problem comes down to continuity and scope.

By continuity, I mean that over time it forgets what it has done, why it did it, and whether it has strayed from the path.

As for scope: because it can’t see the whole project at once, the limited context window leaves it with blind spots.

And that’s why it needs monitoring and feedback, which is what we are trying to provide with our product.

It’s like it only sees the current balance sheet and that’s it.

No other financial statements, past or present.

It doesn’t know how we got there, what changed.

It just has this balance sheet in front of it and tries to do something with it.

Now let’s say it reacts to this and takes some action. Maybe it has also taken action in the past.

It doesn’t know what it did in the past or why it did it, and now it is staring at a new balance sheet, treating it all as a fresh encounter.

The model is an accountant who is permanently amnesic.

Every time you hand it a balance sheet, it can read the numbers, calculate ratios, maybe even optimize something.

But it has no memory of how the company operated yesterday, what transactions led here, or which entries were deliberate temporary losses versus structural shifts.

So when it tries to “improve” the sheet, it often undoes past reasoning or repeats already-fixed mistakes.

That’s why, first of all, you need to track and map what it is doing.

Your mapping engine = the observatory

You extract: backend endpoints, frontend API calls, file locations, dependencies.

That’s a snapshot of the system’s structure.
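Here is a minimal Python sketch of what such a snapshot could look like. It makes a hypothetical assumption about the stack: a FastAPI- or Flask-style backend that declares routes with decorators like `@app.post("/api/tasks")`, and a frontend that calls the API with `fetch(...)`. `ROUTE_RE`, `FETCH_RE`, `Snapshot`, and `take_snapshot` are illustrative names and are not part of any existing tool. Dependency extraction is left out. A real mapping engine would more likely parse syntax trees than rely on regexes.

```python
import re
from dataclasses import dataclass, field

# Hypothetical patterns: assume route decorators like @app.post("/api/tasks")
# on the backend and fetch("/api/tasks") calls on the frontend.
ROUTE_RE = re.compile(r'@\w+\.(get|post|put|patch|delete)\(\s*["\']([^"\']+)["\']')
FETCH_RE = re.compile(r'fetch\(\s*["\'`]([^"\'`]+)["\'`]')


@dataclass
class Snapshot:
    """Structural snapshot: which endpoints and API calls exist, and where."""
    endpoints: dict[str, str] = field(default_factory=dict)  # "POST /api/tasks" -> defining file
    api_calls: dict[str, str] = field(default_factory=dict)  # "/api/tasks" -> calling file (last one wins)


def take_snapshot(files: dict[str, str]) -> Snapshot:
    """Scan {filename: source text} and record backend routes and frontend calls."""
    snap = Snapshot()
    for name, text in files.items():
        for method, path in ROUTE_RE.findall(text):
            snap.endpoints[f"{method.upper()} {path}"] = name
        for url in FETCH_RE.findall(text):
            snap.api_calls[url] = name
    return snap


# Usage with two tiny in-memory "files":
files = {
    "main.py": '@app.post("/api/tasks")\ndef create_task():\n    ...\n',
    "App.js": 'fetch("/api/tasks", { method: "POST" });\n',
}
snap = take_snapshot(files)
print(snap.endpoints)  # {'POST /api/tasks': 'main.py'}
print(snap.api_calls)  # {'/api/tasks': 'App.js'}
```

Running this before and after an edit gives you two comparable snapshots.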

If you run this before and after the AI edits code, you can compute what structurally changed—that’s a graph diff.
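One way to sketch that graph diff rests on a few assumptions. Each snapshot is treated as a set of `(file, endpoint)` edges, read as "this file defines or calls this endpoint." Modified files are found by comparing raw file contents. The `graph_drift_score` is a hypothetical choice: the Jaccard distance between the two edge sets, where 0.0 means identical structure and 1.0 means nothing in common. The text does not say how its own score is computed, and `graph_diff` is an illustrative name.

```python
def graph_diff(
    before: set[tuple[str, str]],
    after: set[tuple[str, str]],
    before_src: dict[str, str],
    after_src: dict[str, str],
) -> dict:
    """Compare two structural snapshots.

    Each snapshot is a set of (file, endpoint) edges, e.g. ("main.py", "POST /api/tasks").
    before_src / after_src map file names to their full source text.
    """
    eps_before = {ep for _, ep in before}
    eps_after = {ep for _, ep in after}

    # Hypothetical drift metric: Jaccard distance between the edge sets.
    union = before | after
    drift = 1 - len(before & after) / len(union) if union else 0.0

    # A file counts as modified if its text changed, or if it was added or removed.
    modified = sorted(
        name
        for name in before_src.keys() | after_src.keys()
        if before_src.get(name) != after_src.get(name)
    )

    return {
        "new_endpoints": sorted(eps_after - eps_before),
        "deleted_endpoints": sorted(eps_before - eps_after),
        "modified_files": modified,
        "graph_drift_score": round(drift, 2),
    }


before = {("main.py", "GET /api/legacy_users"), ("main.py", "GET /api/tasks"), ("App.js", "GET /api/tasks")}
after = {("main.py", "POST /api/tasks"), ("main.py", "GET /api/tasks"),
         ("App.js", "GET /api/tasks"), ("App.js", "POST /api/tasks")}
signal = graph_diff(before, after, {"main.py": "v1", "App.js": "v1"}, {"main.py": "v2", "App.js": "v2"})
print(signal["graph_drift_score"])  # 0.6  (2 shared edges out of 5 total)
```

Jaccard distance is only one reasonable choice. Weighting deleted endpoints more heavily than added ones would be another.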

Example signal:

```json
{
  "new_endpoints": ["POST /api/tasks"],
  "deleted_endpoints": ["GET /api/legacy_users"],
  "modified_files": ["main.py", "App.js"],
  "graph_drift_score": 0.27
}
```

You feed that to the model: “Your last edit introduced 27% structural drift; review endpoints.”

It now has awareness of its own footprint.
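Turning the signal into that message can be as simple as string formatting. The sketch below assumes the signal has the keys shown in the example signal. It uses a hypothetical `threshold` so that small, expected edits don't fill the model's context with warnings. `feedback_message` is an illustrative name, not an existing API.

```python
from typing import Optional


def feedback_message(signal: dict, threshold: float = 0.2) -> Optional[str]:
    """Turn a structural-diff signal into a note for the model's next prompt.

    Returns None when drift is below the (hypothetical) threshold, so routine
    edits don't fill the context window with warnings.
    """
    drift = signal.get("graph_drift_score", 0.0)
    if drift < threshold:
        return None
    lines = [f"Your last edit introduced {drift:.0%} structural drift; review endpoints."]
    for key, label in (
        ("new_endpoints", "New endpoints"),
        ("deleted_endpoints", "Deleted endpoints"),
        ("modified_files", "Modified files"),
    ):
        if signal.get(key):
            lines.append(f"{label}: {', '.join(signal[key])}")
    return "\n".join(lines)


signal = {
    "new_endpoints": ["POST /api/tasks"],
    "deleted_endpoints": ["GET /api/legacy_users"],
    "modified_files": ["main.py", "App.js"],
    "graph_drift_score": 0.27,
}
print(feedback_message(signal))
```

The first line of the output is exactly the sentence quoted above. The remaining lines list the concrete endpoints and files, so the model knows where to look.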

Basically, our purpose slowly rots away when we work with AI.

It’s fine at the start, but as time passes and the project scales, the AI needs help to stay anchored to that purpose.

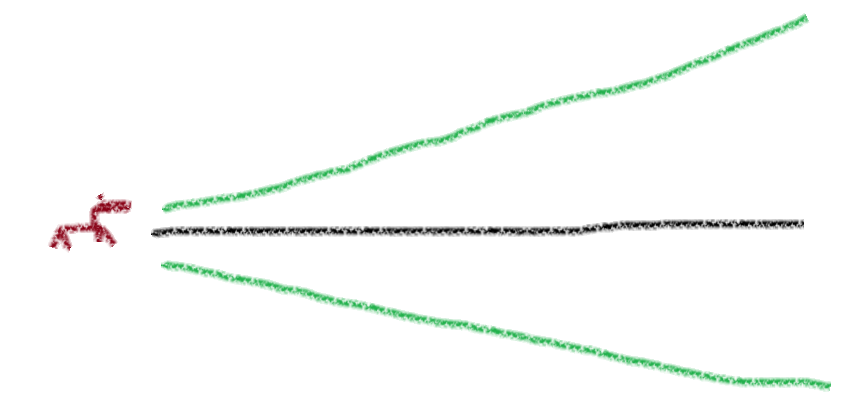

It’s like a horse we want running in a straight line.

But as the distance increases, the ground the horse is running on becomes an expanding cone.

The horse might drift off that straight line and wander around.

That is what we are trying to stop.

We want the continuity and we want the scope to be proper.

We are trying to build the boundary rails that keep the horse running straight as the cone expands, by constantly reminding it where the centerline is.

The Core Problem: Divergence Under Scale

Temporal divergence: each iteration introduces small reasoning errors; without memory, they accumulate over time.

Contextual divergence: as code size and complexity grow, unseen dependencies amplify mistakes.

Intent drift: the model forgets the original objective and starts optimizing for local coherence (it writes code that “looks right” but isn’t aligned with purpose).

You can think of these as entropy sources.

Over time, they dissolve the original clarity of direction.

Purpose decays unless it’s continually reinforced by feedback.

Humans stay aligned because we remember why we started and we see the big picture.

AI doesn’t have that, and it’s my job to give it that.

One of the key challenges is that you simply cannot make 100% accurate judgments about the whole codebase.

Some things are deterministic, while others require heuristics to make an educated guess.

And we don’t want to stoke the flames of misguidance by feeding the model bad guesses.
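One way to act on that split is to tag every signal with how it was derived. By default, only the deterministic ones are passed to the model. This is a minimal sketch, and `Basis`, `Signal`, and `signals_for_model` are hypothetical names. The option to let heuristic signals through, labeled as unverified, is an assumption and not a described feature.

```python
from dataclasses import dataclass
from enum import Enum


class Basis(Enum):
    DETERMINISTIC = "deterministic"  # e.g. a route decorator parsed straight from source
    HEURISTIC = "heuristic"          # e.g. a guess about a URL assembled at runtime


@dataclass(frozen=True)
class Signal:
    message: str
    basis: Basis


def signals_for_model(signals: list[Signal], allow_heuristic: bool = False) -> list[str]:
    """Forward deterministic signals; drop heuristic ones unless explicitly allowed,
    and label them as unverified when they are let through."""
    out = []
    for s in signals:
        if s.basis is Basis.DETERMINISTIC:
            out.append(s.message)
        elif allow_heuristic:
            out.append(f"(unverified guess) {s.message}")
    return out


signals = [
    Signal("Endpoint GET /api/legacy_users was deleted.", Basis.DETERMINISTIC),
    Signal("App.js may still call /api/legacy_users via a built URL.", Basis.HEURISTIC),
]
print(signals_for_model(signals))  # ['Endpoint GET /api/legacy_users was deleted.']
```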

I found that trying to create good heuristic systems felt very similar to trying to create quantitative trading strategies.

And I overfit my trading strategies.

Always.

And that never ends well, so it’s a pursuit I’m not going to undertake.

My mission is to make the most of the certain information I have and derive as much value from it as possible: to stop wasting tokens, save people money, and help the planet by requiring less computation to get things done.

And I bet focusing on continuity and scope will give the most ROI.